Preface

This post describes how I got to create Python scripts in order to graph the VPN user count on Check Point GAIA Gateways to Grafana, using InfluxDB as Time Series Database to store the data. The process had several byproducts, such as Python scripts for

- reading and writing data to CSV files,

- creating and sending emails with attachments and multiple recipients,

- storing data to InfluxDB (to be graphed with Grafana),

- querying InfluxDB and modifying data,

- and creating a Nagios plugin with Python to check for the VPN user count.

Disclaimer: The code displayed here isn’t pretty (didn’t even bother with functions) as it was produced under pressure. In time, polished versions of these scripts will be uploaded in my github, under this repo: https://github.com/itheodoridis/checkpoint-vpn-alerts (give it some time, it’s not there yet). All pieces of code are published under an MIT license.

The story – The ‘what’

It’s April 21rst, 2020, as I begin to write this blog. I had trouble remembering exactly how long I have been working from home in this COVID-19 crisis. This whole thing started about a little over a month ago. I work for an institution whose operations are considered critical so maintaining Operational Availability was a top priority. Everyone on the network team was able to work from home but moving the whole organization to WFH level was a big challenge that naturally had everyone worried (even though it didn’t even come close to something like this post, which described how Cisco IT scaled their VPN infrastructure to cope with the same thing). So the management tasked us on March 19th in the afternoon with providing reports with SPIs (Service Performance Indexes) that could prove the ability of the Remote VPN infrastructure to cope with the users moving to WFH scenario.

“How long do we have?” or “three days for a miracle”

We had three days to come up with a process to gather the data and create reports starting on Monday the 23rd. We were supposed to expose our process to the middle management for approval by Sunday afternoon, while the workforce was preparing for a retreat from the offices to their homes. It was also confided in me that if we could not provide such a process, the upper management might divert the task to outsourced consultants. Now we couldn’t have that, could we?

The ‘how’

The first thing any engineer that has any experience in monitoring would ask is “what are the metrics that provide insight to the VPN user capacity of the system and as a result of the performance in that aspect?”. Naturally the upper management had no idea (that’s technical stuff). So we asked and as it seems, Check Point as a company and their hardware and software have many answers for the same question but there is never a simple one. To be more specific, vpn user capacity doesn’t have to do with the VPN user count itself but with the VPN throughput created by those users. That VPN throughput is not available as a metric unless you own one of the SP grade Platforms, in that case it’s available through SNMP at least, using this OID: .1.3.6.1.4.1.2620.1.48.20.17.0 .

Our own platforms were adequately sized to accommodate more users than our total number of employees and our Internet Feed was significantly lower than the platform VPN throughput limit but the management was still worried and sometimes a picture is worth quite a lot more than a thousand words, so we had to provide proof that the infrastructure was healthy and performant and report about the number of VPN users frequently (meaning that although the stress level got very high to meet with demands under pressure, no actual lives were saved during this process. You get the point).

What about the rest?

Before looking for a more complex solution we searched about what the vendor supports. The vendor has a very nice management software suite with non exportable graphs but none of those had any information about VPN user count. About the usual stats one could gather from the system, like CPU & memory, those were attainable by a number of means:

- Simple screenshots from the management suite (ugly graphs but it can work for an email to the management

- Get data via SNMP and graph them in a suitable software. There is some grief there as to which OID is suitable for CPU and memory, let’s skip that drama, use the Check Point MIB and your software of choice, we use the TIG Stack mostly.

- Some Check Point engineers have created a solution that runs a big script constantly and feeds an influxdb instance which then feeds a Grafana instance. No way or information on how to set this up yourself with only the bits you need, you have to install this on Vmware as an OVA and let it run, querying your gateways. I took a look at the script and figured out that it does a great deal of data gathering for almost every aspect of the systems by running a lot of commands and also querying the local database of the gateways for historical data. We didn’t go for that option. Too much work for too little info. If you are interested, you need to have access to the Check Point forums, the link is here and the software is called CPViewer. I asked about running single command queries for single pieces of information but it seems that it involved running sql queries directly on the gateway on a performance history database which seemed like a very bad idea (crippling the performance of the system while you are trying to measure its performance is always a bad idea).

- You can always run some local CLI commands for some things. For memory the one considered to be the most reliable is CPView (yeah cpviewer has something to do with that..) but it’s like a top equivalent command (linux) so not so easy to get through to the values with a script.. (I tried and failed)

Get the users!

So for CPU & Memory we already had SNMP based graphs and the management suite graphs. About VPN users, we could get a full list of them (who each one was) through the management suite or SNMP but that wasn’t what we needed (we needed how many, plus reporting on who is actually connected and who isn’t could infringe GDPR). Apparently the only way to get the count of VPN users was a CLI command executed at each FW gateway where the VPN is terminated (courtesy of my teammate T.T.):

fw tab -t userc_users -sThe result was a string that could be parsed through regex. I checked across all cluster members and the value was the same, no matter which device was active. So what came naturally to my mind, after having doubled a bit with automating simple stuff with Check Point GAIA gateways, was… Netmiko! (GAIA-based devices are supported). I didn’t really need Text-FSM or NTC-Templates this time (simple regex would work fine). I took upon the task of creating the Python scripts for my team. My workmate K.D. helped me with CSV handling as she had done that before.

Which path to take? All of them!

But getting the values with a script was not enough, and we were under pressure to create reports.. So the data needed to travel. If I could make a script that would gather the counter values and then graph to our TIG stack, that would be great! But I didn’t know how to do that yet. So I thought about three separate courses of action:

- Write something quick and dirty that would just email the number to the middle management. That would cover the “I need to know now!” need. But I didn’t even know how to make a python script create and send an email over MS-Exchange (problem #1).

- Create a nagios plugin written in Python that could get the value of VPN Users, compare it to warning and critical thresholds, create PERF data and return both the status (along with text/value) and the PERF data. This could cover both the need to graph the values (like with Nagios Graph or similar solutions) and provide notification in a scalable way when thresholds are triggered but this approach had other difficulties to overcome: Although I had some python examples I could base myself on and had written custom Nagios plugin in the past in bash and perl, I still had to test this one thoroughly and get python 3.6+ support on my nagios system, which sadly was still running on old OS version (it will be upgraded soon, but I could not really initiate an OS and possible also Nagios upgrade while under pressure for a single piece of information. At the same time the Nagios infrastructure would be critical for our services monitoring while working from home, so I could not risk it becoming useless after a failed upgrade.

- Create a little more complex python script based on the first option that would gather values on a CSV file and run it with cron, then another one that at regular intervals would use the values on the CSV to create an excel sheet with a graph for the VPN users count (along with the max value as provided by the gateway), and then send the file to a list of recipients via email. Except not yet having yet done the script for option 1, I had a few additional issues to research, like writing and reading a CSV file (problem #2, not so hard to be honest), create excel sheets and graphs from CSV with Python (problem #3, a little more difficult for my level, but I knew where to look) and create an email with attachments and send to multiple recipients over MS-Exchange with Python (problem #4, also difficult for my level plus I didn’t know where to look).

I presented all options to my team and the middle management and we all decided to go with options 1 and 3, starting with the simplest one (option no2 would be my next task). I really wanted to go straight for a solution with Influxdb and Grafana, but I didn’t have a choice at that point..

I started on the afternoon of Thursday the 19th of March. We had until Saturday night (the 21rst of May) to come up with a final solution on how to report this so that it could be forwarded to the upper management. We would agree on a course of action on Sunday morning and be ready for War by Monday the 23rd on our guard posts!

On the first day..

So the first try was a simple one. Get in, get the value, get out, print value. Naturally the script would not run interactively, I am just including the interactive version for your convenience (plus I wouldn’t really give you the access credentials and details right?

#!/usr/bin/python3

from netmiko import ConnectHandler, ssh_exception

from paramiko.ssh_exception import SSHException

from getpass import getpass

import subprocess

import sys

#get credentials

user = input('username:')

passwd = getpass()

hostname = input('ip address:')

#define connector

fwext = {

'device_type': 'checkpoint_gaia',

'ip': hostname,

'username': user,

'password': passwd,

}

#try to connect

try:

net_connect = ConnectHandler(**fwext)

except SSHException as e:#replace with netmiko exception

print("Can't connect to device {},\n{}".format(hostname, e))

sys.exit(1)

except ssh_exception.NetMikoTimeoutException as e:

print("Timeout for device {},\n{}".format(hostname, e))

sys.exit(1)

except ssh_exception.NetMikoAuthenticationException as e:

print("Invalid Credentials for device {},\n{}".format(hostname, e))

sys.exit(1)

#send the command to the firewall and extract value

output = net_connect.send_command("fw tab -t userc_users -s")

lines = output.split('\n')

for line in lines:

if 'NAME' in line:

continue

vars = line.split()

vals = vars[3]

peak = vars[4]

print ("Current Remote Users: ",vals)

print ("Peak number of users:", peak)

#disconnect

net_connect.disconnect()Ok, that works. What about sending the data with an email and writing to the .csv file?

#!/usr/bin/python3

from netmiko import ConnectHandler, ssh_exception

from paramiko.ssh_exception import SSHException

from getpass import getpass

import subprocess

import sys

import smtplib

import time

import csv

import os

#define csv filename based on the current day

timestr = time.strftime("%Y%m%d")

filename = "vpnusers_"+timestr+".csv"

#define credentials

user = 'the_firewall_user'

passwd = 'the_firewall_password'

hostname = 'the_firewall_ip_address'

#define connector

fwext = {

'device_type': 'checkpoint_gaia',

'ip': hostname,

'username': user,

'password': passwd,

}

#try to connect

try:

net_connect = ConnectHandler(**fwext)

except SSHException as e:#replace with netmiko exception

print("Can't connect to device {},\n{}".format(hostname, e))

sys.exit(1)

except ssh_exception.NetMikoTimeoutException as e:

print("Timeout for device {},\n{}".format(hostname, e))

sys.exit(1)

except ssh_exception.NetMikoAuthenticationException as e:

print("Invalid Credentials for device {},\n{}".format(hostname, e))

sys.exit(1)

#send the command to the firewall and extract value

output = net_connect.send_command("fw tab -t userc_users -s")

lines = output.split('\n')

for line in lines:

if 'NAME' in line:

continue

vars = line.split()

vals = vars[3]

peak = vars[4]

print ("Current Remote Users: ",vals)

print ("Peak number of users:", peak)

#disconnect

net_connect.disconnect()

timestr = str(time.strftime("%H:%M %d/%m/%Y"))

recipients = "recipient1@domain,recipient2@domain,recipient3@domain"

TO = recipients.split(',')

SUBJECT = 'VPN users report'

TEXT = "Date and Time: {}\nCurrent Remote Users: {}\nPeak Number of users: {}".format(timestr, vals, peak)

# exchange Sign In

exchange_sender = 'exchange_user_address'

exchange_passwd = 'exchange_user_password'

#define exchange server details

server = smtplib.SMTP('exchange server', exchangeport)

server.ehlo()

server.starttls()

server.login(exchange_sender, exchange_passwd)

#create mail body

BODY = '\r\n'.join(['To: %s' % TO,

'From: %s' % exchange_sender,

'Subject: %s' % SUBJECT,

'', TEXT])

#try to connect and send email

try:

server.sendmail(exchange_sender, TO, BODY)

print ('email sent')

except:

print ('error sending mail')

server.quit()

#check if csv file exists or not, append if it does

if os.path.isfile(filename):

vpnusersfile = open(filename, 'a')

csvwriter = csv.writer(vpnusersfile)

else:

vpnusersfile = open(filename, 'w')

csvwriter = csv.writer(vpnusersfile)

header = ["Time", "VpnUsers", "PeakUsers"]

csvwriter.writerow(header)

#create and write entry, then close file

hrsandmins = str(time.strftime("%H:%M"))

new_row = [hrsandmins, vals, peak]

csvwriter.writerow(new_row)

vpnusersfile.close()However when trying to create a cron entry for the basic script, I ran into some error complaining about “No MTA installed, discarding output“. That turned out to be a classic, after some version of every linux OS, the cron entries need to be created with full paths and the script must use full paths in the code, as the concept of the current directory doesn’t follow your script if it’s run by cron. So the filename should be defined with something like this, or an error would be produced, which could not in turn be emailed to the root user (that’s what the error message means):

filename = "/opt/vpnalerts/vpnusers_"+timestr+".csv"So far I had found out how to get the data, send it over via email, write it to csv and quit. The base of this script could be used to cover the basic demands we had if we then had to create the graph by hand (in excel). So problems #1 and #2 were solved and this gained me some time to work on the rest. One thing to notice about the smtplib and especially the .sendmail method, is that it needs to have a list as the ‘TO’ argument. That is very important in order to understand the difficulties in solving problem #4. Where did I find the necessary information?

Sources for the first day

For the first part, and specifically for reading and writing csv files, you can never go wrong with RealPython:

The first one was also useful for solving problem #3. I knew that the csv library was not enough to help me create the graphs and the best suited candidate was the Pandas library, very popular with Data Scientists. I had played before with it when reading and writing data from/to excel files (when researching for this post) but this time I needed more. About the email I mostly relied on the following url and posts on Stack Overflow, but as it appears it wasn’t enough when I got to sending attachments: https://realpython.com/python-send-email/

The next day (Saturday the 21rst of March) we needed to go to the office for one last time before the lockdown for hardware replacement procedures, so the morning time was not available to continue working on this.

On the second day..

I picked up the work early in the afternoon. First I needed to understand a bit more about what a dataframe is in Pandas. It’s really a magic library! I only scratched the surface.

So it’s very easy to read data from csv files with pandas. What is needed in order to successfully create an excel graph, is to create a dataframe from the data, set an index for the dataframe and then let Pandas do it’s magic, while defining the details (colours, legends, etc). I started with Real Python’s document but then I needed to understand more:

- https://realpython.com/pandas-read-write-files/

- https://pandas-xlsxwriter-charts.readthedocs.io/chart_examples.html#line-chart

- https://github.com/jmcnamara/pandas_xlsxwriter_charts/tree/master/examples

So I built on the code from the previous day, and made a script that would collect the values from the .csv file, get them into a pandas dataframe using time as an index (that’s important so we can get the x-axis right). Then the code would create the excel sheet in memory and the graph to go with it, based on data from the dataframe, where the index was the first column (time) and the other two columns were the series to be graphed in the y-axis (current users and max). All of that is evident on the code snippet further below. The only trouble I had was figuring out where to set the index, so I did it while reading the .csv into the dataframe. The real problem came about later when I had to send the email as attachment to multiple recipients. It would only be sent to the first one. The posts I used were the one from Real Python plus these:

- https://docs.python.org/3/library/email.mime.html

- https://medium.com/hacktive-devs/send-a-mail-with-python-script-8b00ed0be295

But, like I said, it wasn’t enough. The problem was that I could not use the recipients as a list for the encoding process. In that process the code expected a string (value stored under the key “To”). When a string was used then the encoding would succeed and the message would be sent but only to the first recipient. The answer became evident when I took a look at the following url https://docs.python.org/3/library/email.examples.html . You see, no one will spell it out for you but here it is:

The encoding process needs a string for the recipients (it’s what you see when you open the message you get in Outlook) or it fails. But in order to tell the server where to send the email, when using the .sendmail() method, you stil need to use a list for it (didn’t I tell you already?). So the same recipients string needed to be split into list elements on the fly (observe the recipients.split(‘,’) argument when the method is used in the second snippet (correct code). So here is the initial script (that only sent the email to one recipient):

#!/usr/bin/python3

import sys

import smtplib, email

import time

import os

import pandas as pd

from email import encoders

from email.mime.base import MIMEBase

from email.mime.multipart import MIMEMultipart

from email.mime.text import MIMEText

datestr = time.strftime("%Y%m%d")

sheet_date = time.strftime("%d-%m-%Y")

csvfilename = "/opt/vpnalerts/vpnusers_"+datestr+".csv"

excel_file = "/opt/vpnalerts/vpnusers_"+datestr+".xlsx"

sheet_name = "VPN Users {}".format(sheet_date)

df1 = pd.read_csv(csvfilename, index_col="Time")

# Create a Pandas Excel writer using XlsxWriter as the engine.

writer = pd.ExcelWriter(excel_file, engine='xlsxwriter')

df1.to_excel(writer, sheet_name= sheet_name)

# Access the XlsxWriter workbook and worksheet objects from the dataframe.

workbook = writer.book

worksheet = writer.sheets[sheet_name]

# Create a chart object.

chart = workbook.add_chart({'type': 'line'})

#chart = workbook.add_chart({'type': 'area', 'subtype': 'stacked'})

# Configure the series of the chart from the dataframe data.

for i in range(2):

col = i + 1

chart.add_series({

'name': [sheet_name, 0, col],

'categories': [sheet_name, 1, 0, df1.shape[0], 0],

'values': [sheet_name, 1, col, df1.shape[0], col],

})

chart.set_legend({'position': 'top'})

# Configure the chart axes.

chart.set_x_axis({'name': 'Time of Day'})

chart.set_y_axis({'name': 'Value', 'major_gridlines': {'visible': False}})

# Insert the chart into the worksheet.

worksheet.insert_chart('E2', chart)

# Close the Pandas Excel writer and output the Excel file.

writer.save()

recipients = "recipient1@domain,recipient2@domain,recipient3@domain"

subject = 'VPN users graph report for {}'.format(sheet_date)

bodytext= "This is the report in Excel Graph format for {} for VPN users and peak values per time of day. \n\nWith Compliments of the Network Section of the Institution".format(sheet_date)

# exchange Sign In

exchange_sender = 'exchange_user_address'

exchange_passwd = 'exchange_user_password'

message = MIMEMultipart()

message["From"] = exchange_sender

message["To"] = recipients

message["Subject"] = subject

#message["Bcc"] = recipients

message.attach(MIMEText(bodytext, "plain"))

# Open file in binary mode

with open(excel_file, "rb") as attachment:

# Add file as application/octet-stream

# Email client can usually download this automatically as attachment

part = MIMEBase("application", "octet-stream")

part.set_payload(attachment.read())

# Encode file in ASCII characters to send by email

encoders.encode_base64(part)

# Add header as key/value pair to attachment part

part.add_header(

"Content-Disposition",

f"attachment; filename= {excel_file}",

)

# Add attachment to message and convert message to string

message.attach(part)

text = message.as_string()

# Log in to server using secure context and send email

with smtplib.SMTP('SMTP_SERVER', SMTP_PORT) as server:

server.ehlo()

server.starttls()

server.login(exchange_sender, exchange_passwd)

try:

server.sendmail(exchange_sender, recipients, text)

print ('email sent')

if os.path.isfile(excel_file):

#os.remove(excel_file)

print(excel_file, "file deleted")

else:

print(f'Error: {excel_file} not a valid filename')

if os.path.isfile(csvfilename):

#os.remove(csvfilename)

print(csvfilename, "file deleted")

else:

print(f'Error: {csvfilename} not a valid filename')

except:

print ('error sending mail') I was already good with collecting the values and sending them over via email to our manager (one recipient was enough at that point). So I had time to research what I wanted to do from the start: Write the values to an influxDB time series and use that to create a Grafana panel. Fortunately Influx people have done a good job providing and documenting a python library for accessing InfluxDB via Python, plus I also found another post about using python with InfluxDB and Grafana:

- http://www.compassmentis.com/2016/06/grafana-influxdb-and-python-simple-sample/

- https://www.influxdata.com/blog/getting-started-python-influxdb/

- https://influxdb-python.readthedocs.io/en/latest/examples.html

- https://github.com/influxdata/influxdb-python

So I had a good starting point. I pulled a long night and an early morning..

On the third day..

Needless to say that knowing how to use the library is not enough if you want to do more than just writing points to an InfluxDB time series. You need perhaps to create the series on the InfluxDB server, define access rights etc. You can take a look at my 3 part series o the TIG stack for references (Part-1, Part-2, Part-3 is still under development) or take a look at the following links:

- https://grafana.com/docs/grafana/v6.6/features/datasources/influxdb/

- https://docs.influxdata.com/influxdb/v1.7/query_language/database_management/

- https://docs.influxdata.com/influxdb/v1.7/concepts/glossary/

- https://docs.influxdata.com/influxdb/v1.7/query_language/spec/

Lets try that one more time..

First came the alternative script that gathered the data. Instead of storing them to a .csv file, it stored them in an InfluxDB time series. The part where we connect to the gateway to get the data remains the same. For the rest, first we need to import the python library for influxdb

from influxdb import InfluxDBClientThen we get the current time in a string (watch that call closely, I will come back to it) and create the connector to the influxDB server, declaring a client:

timestr = str(time.strftime("%Y-%m-%dT%H:%M:%SZ", time.gmtime()))

client = InfluxDBClient(host='InfluxDB_Server', port=INFLUXDB_PORT, username='USER', password='PASSWORD')If the database does not exist on the influxDB server, it is created. If it does exist, the python client switches to it. The rest is pretty simple. We create a json payload that is written in the time series.

if 'vpnusers' not in client.get_list_database():

client.create_database('scripts')

client.switch_database('scripts')

json_data = [

{

"measurement": "vpnusers",

"tags": {

"host": hostname

},

"time": timestr,

"fields": {

"currentusers": int(vals),

"peakusers": int(peak)

}

}

]

result = client.write_points(json_data)

if result:

print("everything ok")

else:

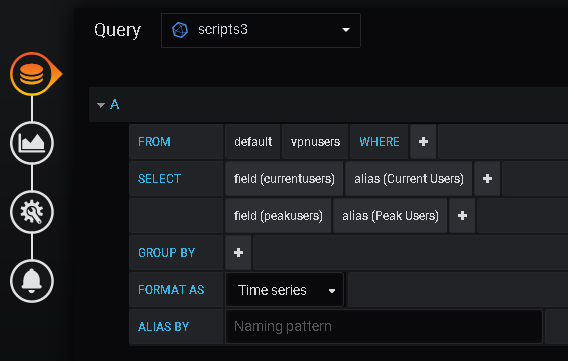

print("data not writen to influxDB")That took care of writing the data points to the InfluxDB server. When I was done I was anxious to see the data in Grafana, so I went ahead and declared a new datasource, a new dashboard and the appropriate variables (I won’t get into that, I took care to create a tag in the code above, which I used when declaring the variable ‘host’, not that necessary unless you get data from more hosts) and a new panel in the dashboard. The query for the panel is the following. If you needed to have the same panel for different hosts (gateways) then after ‘WHERE’ you would have to create a filter for the ‘host’ tag.

SELECT "currentusers" AS "Current Users", "peakusers" AS "Peak Users" FROM "vpnusers" WHERE $timeFilter

You also need to choose a line graph for it, add max, avg and current value tags, plus other standard stuff. Take a look at my TIG stack series, you can find more sources on how to build simple panels. The result should have been the graph you see on the image at the start of the post. But I wasn’t getting any data in the graph, although they were there in the time series.. so I was baffled ..

After a little research and asking around (twitter), plus the fact that I started getting data in the graph after 2-3 hours, I realized and then verified an important piece of information: Grafana reads data in GM time and then diplays it in local browser time (that is actually an option in the Grafana GUI). So I had to store the data in GM time in the first place. I quickly wrote a script to modify the values I had already in the time series and then edited my script code to get GM time (that’s what time.gmtime() was for). For creating the email report we no longer had to use .csv files. We only had to get data from indluxDB itself with a query and then create the report and send the file.

In the initial setup, a cron job called the script to store data in the .csv every five minutes, another script would get the data and create the excel sheet with the graph and a third cron job would delete the .csv a little before midnight. When the new day would start, a new .csv would be created and so on.

In the new setup, the data collection was done by the modified script every five minutes and stored in InfluxDB, then the script below would be executed once per day to create the excel report to send on email at the end of the day. No .csv file created so delete cron job either.

The query asks for data that goes back one day and gets data in a list of dictionaries. Since the time data is in GM time the script must adapt the values to local time (or the report would not make much sense to the management), depending on time zone and daylight saving time (there are harcoded values for my timezone in the script, sorry I didn’t research that part, you can perhaps do it yourselves?). In order to adapt the time values the points need to be added to a new list because the data from the query response are not editable (I obviously tried that first).

The code then creates the dataframe from the list of dictionaries, but we do not forget to set the index to the right key (‘Time”) already in the dataframe. The rest of the code is almost the same as before, except the use of the split(‘,’) method on the recipients string in order to pass a list as argument to the .sendmail() method. Here is the script:

#!/usr/bin/python3

from influxdb import InfluxDBClient

import sys

import smtplib, email

import time

import os

import pandas as pd

from email import encoders

from email.mime.base import MIMEBase

from email.mime.multipart import MIMEMultipart

from email.mime.text import MIMEText

#define the client for connection to influxDB

client = InfluxDBClient(host='InfluxDB_Server', port=INFLUXDB_PORT, username='USER', password='PASSWORD')

#choose database (it should be there, or you should exit, think of defining an exit point here)

client.switch_database('scripts')

#define query for fields and 24 hours, store query in result object

query = "select currentusers, peakusers from vpnusers where time > now() - 1d;"

#print("Querying data: " + query)

result = client.query(query)

#check whether we were able to read the data from influxDB (if not we should probably exit with error)

if result:

print("everything ok")

else:

print("data not read from influxDB")

#get a handle for query points from result object in a list of dicts. Not editable (unmutable)

points = result.get_points()

newdata = []

for point in points:

newdata_point = dict()

initial = point['time']

split1 = initial.split('T')

split2 = split1[1].split(':')

if int(split2[0]) < 21:

hours = str(int(split2[0]) + 3)

else:

hours = str(int(split2[0]) + 3 - 24)

mins = split2[1]

final = hours+":"+mins

newdata_point['time'] = final

newdata_point['currentusers'] = point["currentusers"]

newdata_point['peakusers'] = point['peakusers']

newdata.append(newdata_point)

#create the dataframe from the points

df = pd.DataFrame(newdata)

#set the index and remove as column

df.set_index('time', inplace=True)

#create names from date and time

sheet_date = time.strftime("%d-%m-%YT%H-%M")

excel_file = "vpnusers_"+sheet_date+".xlsx"

sheet_name = "VPN Users {}".format(sheet_date)

# Create a Pandas Excel writer using XlsxWriter as the engine.

writer = pd.ExcelWriter(excel_file, engine='xlsxwriter')

df.to_excel(writer, sheet_name= sheet_name)

# Access the XlsxWriter workbook and worksheet objects from the dataframe.

workbook = writer.book

worksheet = writer.sheets[sheet_name]

# Create a chart object.

chart = workbook.add_chart({'type': 'line'})

#chart = workbook.add_chart({'type': 'area', 'subtype': 'stacked'})

# Configure the series of the chart from the dataframe data.

for i in range(2):

col = i + 1

chart.add_series({

'name': [sheet_name, 0, col],

'categories': [sheet_name, 1, 0, df.shape[0], 0],

'values': [sheet_name, 1, col, df.shape[0], col],

})

chart.set_legend({'position': 'top'})

# Configure the chart axes.

chart.set_x_axis({'name': 'Time of Day'})

chart.set_y_axis({'name': 'Value', 'major_gridlines': {'visible': False}})

# Insert the chart into the worksheet.

worksheet.insert_chart('F2', chart)

# Close the Pandas Excel writer and output the Excel file.

writer.save()

recipients = "recipient1@domain,recipient2@domain,recipient3@domain"

subject = 'VPN users graph report for {}'.format(sheet_date)

bodytext= "This is the report in Excel Graph format for {} for VPN users and peak values per time of day. \n\nWith Compliments of the Network Section of Institution".format(sheet_date)

# exchange Sign In

exchange_sender = 'exchange_user_address'

exchange_passwd = 'exchange_user_password'

message = MIMEMultipart()

message["From"] = exchange_sender

message["To"] = ""

message["Subject"] = subject

message["Bcc"] = recipients

message.attach(MIMEText(bodytext, "plain"))

# Open file in binary mode

with open(excel_file, "rb") as attachment:

# Add file as application/octet-stream

# Email client can usually download this automatically as attachment

part = MIMEBase("application", "octet-stream")

part.set_payload(attachment.read())

# Encode file in ASCII characters to send by email

encoders.encode_base64(part)

# Add header as key/value pair to attachment part

part.add_header(

"Content-Disposition",

f"attachment; filename= {excel_file}",

)

# Add attachment to message and convert message to string

message.attach(part)

text = message.as_string()

# Log in to server using secure context and send email

with smtplib.SMTP('smtp-mk.bankofgreece.gr', 25) as server:

server.ehlo()

server.starttls()

server.login(exchange_sender, exchange_passwd)

try:

server.sendmail(exchange_sender, recipients.split(','), text)

print ('email sent')

if os.path.isfile(excel_file):

os.remove(excel_file)

print(excel_file, "file deleted")

else:

print(f'Error: {excel_file} not a valid filename')

except:

print ('error sending mail')eh.. wait, what about that Nagios Plugin?

Right! I almost forgot about that.. Well, the specification for the Nagios Plugin defines exactly what it should return as exit codes and how to pass back values as perf data. I also had integrated a couple of python based plugins in our Nagios Infrastructure so I decided to take a look at those. You can find information for what I mentioned, here:

- https://assets.nagios.com/downloads/nagioscore/docs/nagioscore/4/en/pluginapi.html – Nagios 4.x spec for the plugin API

- https://nagios-plugins.org/doc/guidelines.html – General guidelines

- https://github.com/mhoogveld/check_cisco_ip_sla – Python based script created by mhoogveld (sorry could not find additional details about the creator)

Let me remind you that Nagios plugins can be used on a group of “compatible” software products like Nagios Core, Nagios XI, Icinga, OP5, etc. So based on a similar structure as the check ip sla plugin, this one checks for the vpn user count and reports back exit code, message and perf_data. The code is prettier here as I chose to maintain a similar class structure that mhoogveld used for his plugin.

I have to warn you again.. I did not have the time to upgrade the OS on our main Nagios server or install the NRPE server software on the automation server in order to check the plugin in production. I think it will work judging from my tests, you will just have to test it yourself. Besides that, there is really no point right now in getting notified for a threshold triggered except maybe for knowing when a record is reached, as the FW infrastructure will not have any problems with the current VPN user count (regardless of the concern expressed by the management). Besides that, I could also define an alert in Grafana! (I will leave that for my TIG stack series).

So here is the Nagios plugin python code, it’s under MIT license like the rest of the code, have fun with it if you want to! Gateway hostname, user, password and threshold values are passed as arguments, exactly as you would do with any other Nagios plugin :

#!/usr/bin/python

"""

This plugin can get the number of vpn users from a check point gaia gateway.

That number is checked against the number of warning and critical level users.

If the number is lower than the warning level number, an OK status is returned

If the number is higher than warning but lower than critical, a WARNING status is returned.

If the number is higher than critical, a CRITICAL status is returned.

In any case, the number of users is also returned.

"""

from netmiko import ConnectHandler, ssh_exception

from paramiko.ssh_exception import SSHException

#from getpass import getpass

import subprocess

import argparse

__author__ = "my name"

__version__ = "1.0"

__email__ = "my email address"

__licence__ = "MIT"

__status__ = "Production"

class VpnUserChecker:

STATUS_OK = 0

STATUS_WARNING = 1

STATUS_CRITICAL = 2

STATUS_UNKNOWN = 3

def __init__(self):

self.status = None

self.messages = []

self.perfdata = []

self.options = None

self.vpnusers = 0

def run(self):

self.parse_options()

self.get_vpn_users()

self.compare_users()

self.print_output()

return self.status

def parse_options(self):

parser = argparse.ArgumentParser(

description="Monitoring check plugin to check number of Check Point Gaia VPN users."

"If the number is above warning level, the status is raised to WARNING. "

"If the number is above critical level, the status is CRITICAL."

"UNKNOWN is returned if there is a failure."

)

parser.add_argument("-H", "--hostname",

type=str, help="Hostname or ip-address")

parser.add_argument("-u", "--user",

type=str, help="Gaia user name")

parser.add_argument("-p", "--password",

type=str, help="Gaia user password")

parser.add_argument("-w", "--warning",

type=int, help="Warning level for number of VPN users")

parser.add_argument("-c", "--critical",

type=int, help="Critical level for number of VPN users")

self.options = parser.parse_args()

if not self.are_options_valid():

print("Run with --help for usage information")

print("")

exit(0)

def are_options_valid(self):

if not self.options.hostname:

print("You must specify a hostname")

return False

if not self.options.user:

print("You must specify a user name")

return False

if not self.options.password:

print("You must specify a user password")

return False

if not self.options.warning:

print("You must specify a valid number of users for warning level")

return False

if not self.options.critical:

print("You must specify a valid number of users for critical level")

return False

if self.options.critical <= self.options.warning:

print("warning number of users must be less than critical number of users")

return False

return True

def print_output(self):

""" Prints the final output (in Nagios plugin format if self.status is set)

:return:

"""

output = ""

if self.status == self.STATUS_OK:

output = "OK"

elif self.status == self.STATUS_WARNING:

output = "Warning"

elif self.status == self.STATUS_CRITICAL:

output = "Critical"

elif self.status == self.STATUS_UNKNOWN:

output = "Unknown"

if self.messages:

if len(output):

output += " - "

# Join messages like sentences. Correct those messages which already ended with a period or a newline.

output += ". ".join(self.messages).replace(".. ", ".").replace("\n. ", "\n")

if self.perfdata:

if len(output):

output += " | "

output += " ".join(self.perfdata)

print(output)

def get_vpn_users(self):

#user = input('username:')

#passwd = getpass()

fwext = {

'device_type': 'checkpoint_gaia',

'ip': self.options.hostname,

'username': self.options.user,

'password': self.options.password,

}

try:

net_connect = ConnectHandler(**fwext)

except SSHException as e:#replace with netmiko exception

#self.add_status(self.STATUS_UNKNOWN)

print("can't connect to device {}, {}".format(self.options.hostname, e))

sys.exit(1)

except ssh_exception.NetMikoTimeoutException as e:

#self.add_status(self.STATUS_UNKNOWN)

print("Timeout for device {}, {}".format(self.options.hostname, e))

sys.exit(1)

except ssh_exception.NetMikoAuthenticationException as e:

#self.add_status(self.STATUS_UNKNOWN)

print("Invalid Credentials for device {}, {}".format(self.options.hostname, e))

sys.exit(1)

output = net_connect.send_command("fw tab -t userc_users -s")

lines = output.split('\n')

for line in lines:

if 'NAME' in line:

continue

vars = line.split()

vals = vars[3]

peak = vars[4]

self.vpnusers = int(vals)

#print ("Current Remote Users: ",vals)

#print ("Peak number of users:", peak)

self.set_message("VPN Users: {}".format(vals))

self.add_perfdata("'VPN_Users'= {}".format(vals))

net_connect.disconnect()

def add_status(self, status):

""" Set the status only if it is more severe than the present status

The order of severity being OK, WARNING, CRITICAL, UNKNOWN

:param status: Status to set, one of the self.STATUS_xxx constants

:return: The current status

"""

if self.status is None or status > self.status:

self.status = status

def set_message(self, message):

self.messages = [message]

def add_message(self, message):

self.messages.append(message)

def add_perfdata(self, perfitem):

self.perfdata.append(perfitem)

def compare_users(self):

if self.vpnusers < self.options.warning:

self.status = self.STATUS_OK

elif self.vpnusers < self.options.critical:

self.status = self.STATUS_WARNING

else:

self.status = self.STATUS_CRITICAL

if __name__ == "__main__":

checker = VpnUserChecker()

result = checker.run()

exit(result)Conclusion

So by Sunday afternoon we had a working solution and by Monday morning we could report about the VPN user count using Grafana graphs. We created the final emails by hand, I am afraid my python knowledge stops there at the time being. If one could generate reports from Grafana by leveraging the Grafana api or a Python SDK, that would be grand, wouldn’t it? Also, Grafana pdf reports are possible with Grafana Enterprise (which we are not subscribed to yet).

So job done, congrats for the network admin team, plus I learned a lot of things that I can use in my next projects and share with my workmate (and the community now through this post). If you have any remarks or suggestions, look me up on Twitter: @mythryll. Don’t forget I will publish polished versions on Github eventually, so keep an eye for them and please be lenient, I am not a developer! Have fun!